SeqSLAM++: View-based Robot Localization and Navigation

- Shuji Oishi

- Yohei Inoue

- Jun Miura

- Shota Tanaka

Robotics and Autonomous Systems 2019

Abstract

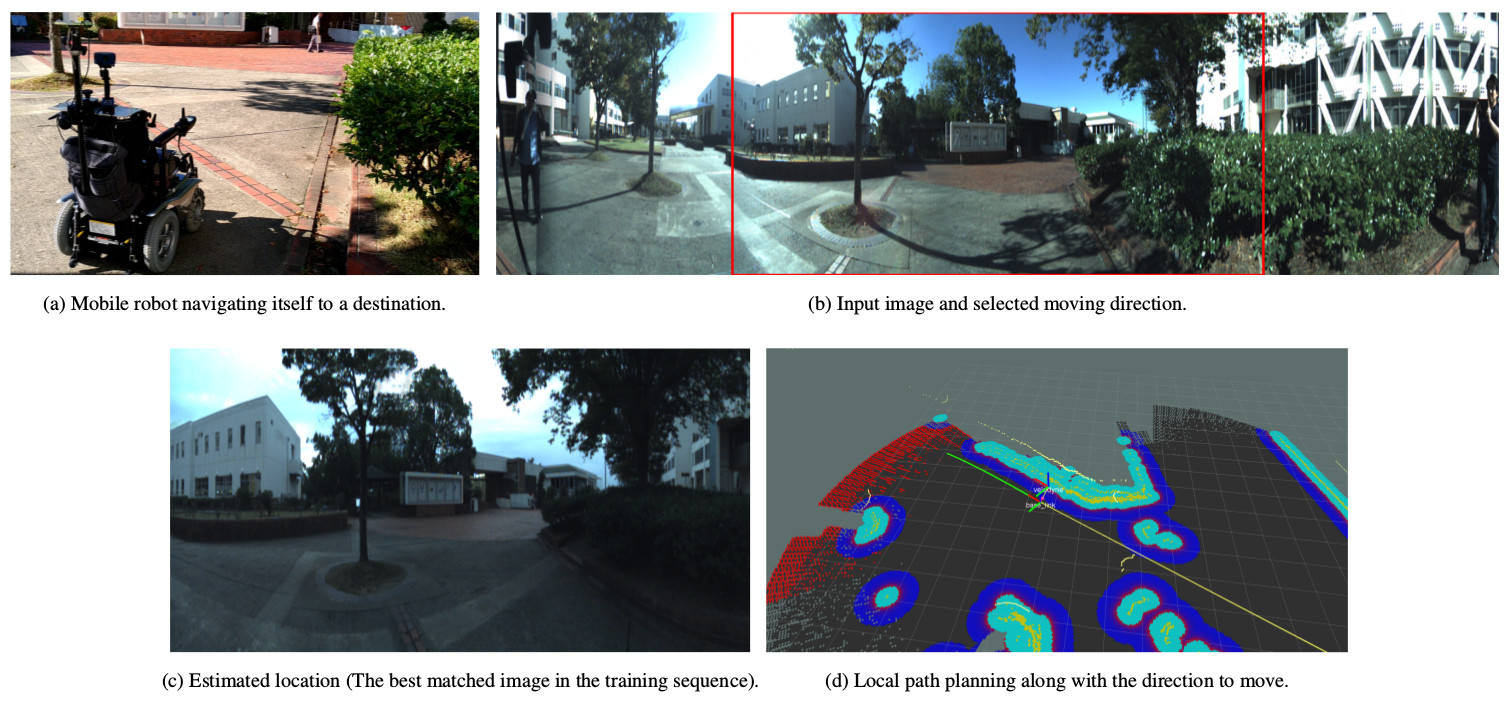

This paper presents a new approach to view-based localization and navigation in outdoor environments, which are indispensable functions for mobile robots. Several approaches to autonomous navigation have been proposed. GPS-based systems are widely used, especially in automobiles; however, they can be unreliable or non-operational near tall buildings. Localization with a precise 3D digital map of the target environment also enables mobile robots equipped with range sensors to estimate their poses accurately, but maintaining a large-scale outdoor map is often costly. We have therefore developed a novel view-based localization method, SeqSLAM++, which extends the conventional SeqSLAM not only to robustly estimate the robot's position by comparing image sequences but also to cope with changes in the robot's heading and speed, as well as changes in view, by using wide-angle images and a Markov localization scheme. Following the direction of movement provided by SeqSLAM++, a local-level path planner navigates the robot by repeatedly setting subgoals that take into account the structure of the surrounding environment, as observed with a 3D LiDAR. The entire navigation system has been implemented in the ROS framework, and the effectiveness and accuracy of the proposed method were evaluated through offline and online navigation experiments.
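The abstract describes combining image-sequence comparison with a Markov localization scheme so that changes in the robot's speed can be absorbed. The following minimal Python sketch illustrates the general idea of Markov localization (a discrete Bayes filter) along a route of stored reference images. It is not the authors' implementation. It assumes that the robot's position is a discrete index into the reference image sequence, that each motion step advances that index by 0 to 2 frames with hand-picked probabilities, and that an image difference `d` is converted to a likelihood via `exp(-d / sigma)`. The function names `predict` and `correct`, the difference values, and `sigma` are hypothetical.

```python
import math
from typing import List, Sequence


def predict(belief: Sequence[float], step_probs: Sequence[float]) -> List[float]:
    """Motion update (hypothetical model): the robot advances k reference
    frames with probability step_probs[k]. Allowing several step sizes is
    one simple way to tolerate changes in speed."""
    n = len(belief)
    out = [0.0] * n
    for i, p in enumerate(belief):
        for k, pk in enumerate(step_probs):
            j = min(i + k, n - 1)  # clamp at the last reference image
            out[j] += p * pk
    return out


def correct(belief: Sequence[float], diffs: Sequence[float], sigma: float) -> List[float]:
    """Measurement update: diffs[i] is an assumed image difference between the
    current view and reference image i (smaller means more similar)."""
    weighted = [p * math.exp(-d / sigma) for p, d in zip(belief, diffs)]
    total = sum(weighted)
    return [w / total for w in weighted]  # renormalize to a probability distribution


# Usage on a toy route of five reference images (all values are made up).
route_len = 5
belief = [1.0 / route_len] * route_len  # no prior knowledge of the position
belief = correct(belief, [0.9, 0.8, 0.1, 0.7, 0.9], sigma=0.1)
best_first = max(range(route_len), key=belief.__getitem__)

belief = predict(belief, [0.2, 0.6, 0.2])  # most likely: advance one frame
belief = correct(belief, [0.9, 0.9, 0.8, 0.1, 0.8], sigma=0.1)
best_second = max(range(route_len), key=belief.__getitem__)

print(best_first, best_second)  # prints "2 3"
```

Because the motion model spreads probability over several possible advances, the filter can keep tracking the route even when the robot moves faster or slower than it did when the reference images were recorded. The image similarity then selects the most consistent position.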