L-C*: Visual-inertial Loose Coupling for Resilient and Lightweight Direct Visual Localization

- Shuji Oishi

- Kenji Koide

- Masashi Yokozuka

- Atsuhiko Banno

2023 IEEE International Conference on Robotics and Automation (ICRA2023)

Abstract

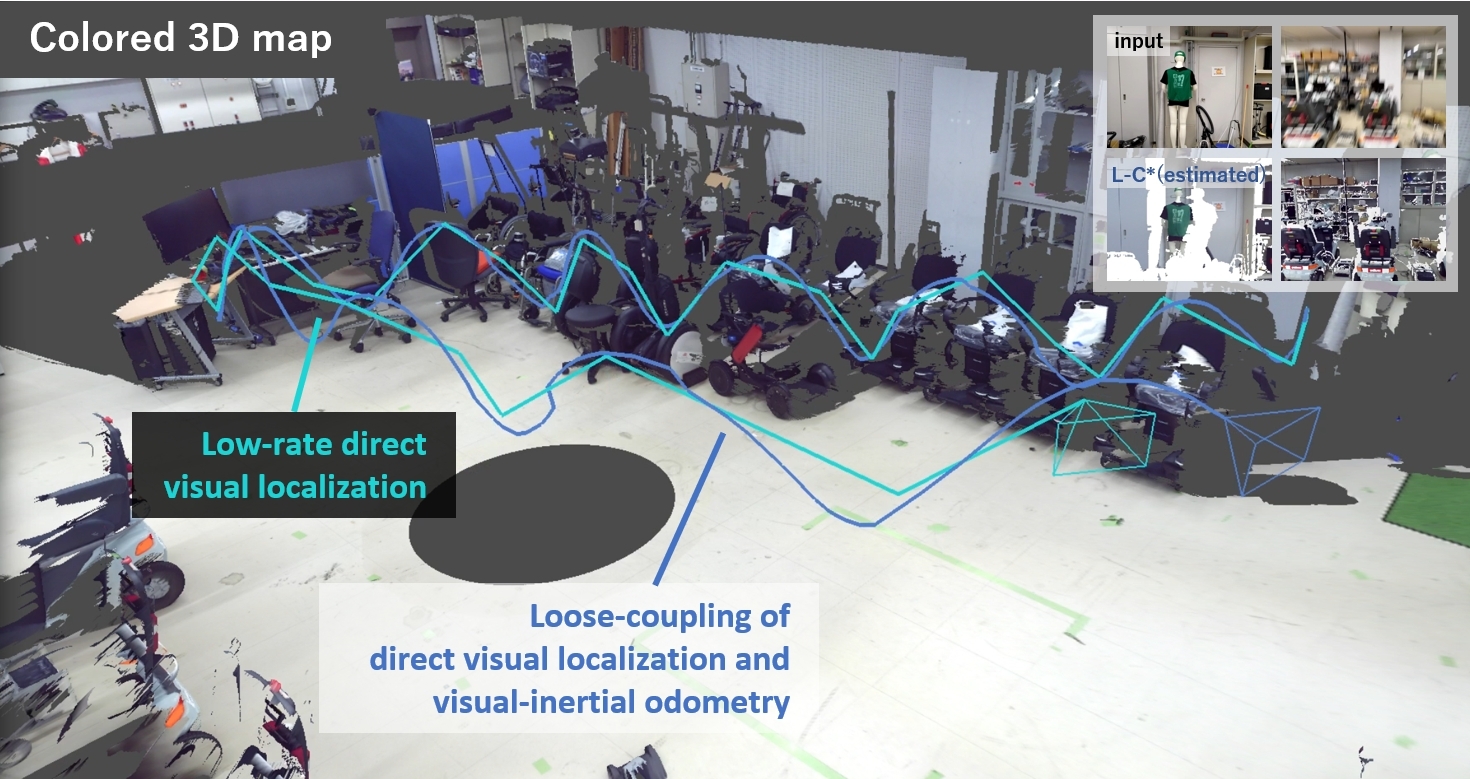

This study presents L-C*, a framework for resilient and lightweight direct visual localization that employs a loosely coupled fusion of visual and inertial data. Unlike indirect methods, direct visual localization enables accurate pose estimation on general colored three-dimensional maps that are not tailored for visual localization. However, it suffers from temporary localization failures and computational costs that are high for real-time applications. For long-term and real-time visual localization, we developed L-C*, which incorporates the direct visual localization method C* into a visual-inertial loose coupling. By capturing ego-motion via visual-inertial odometry to interpolate between global pose estimates, the framework significantly reduces how often the demanding global localization must run, thereby enabling lightweight but reliable visual localization. In addition, the system forms a closed loop that feeds the latest pose estimate to the visual localization component as the initial guess for the next pose inference, which makes it highly robust. A quantitative evaluation on a simulation dataset demonstrated the accuracy and efficiency of the proposed framework. Experiments using smartphone sensors also demonstrated the robustness and resiliency of L-C* in real-world situations.
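The interpolation idea in the abstract can be illustrated with a small sketch. It is not the authors' implementation: it assumes planar poses `(x, y, theta)` instead of full 6-DoF poses, and the names `LooseCoupler`, `on_global_fix`, and `current_pose` are hypothetical. Each infrequent global localization result is used to re-anchor the odometry frame in the map, and the odometry alone supplies the map pose in between. The same propagated pose could serve as the initial guess for the next global localization, which is the closed loop the abstract describes.

```python
import math

def compose(a, b):
    """Return the planar pose a * b, with poses given as (x, y, theta)."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)

def inverse(a):
    """Return the inverse of a planar pose (x, y, theta)."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), -at)

class LooseCoupler:
    """Hypothetical helper: anchors an odometry frame to a map frame."""

    def __init__(self):
        # Transform from the odometry frame to the map frame.
        # Identity until the first global localization result arrives.
        self.map_from_odom = (0.0, 0.0, 0.0)

    def on_global_fix(self, map_pose, odom_pose):
        """Re-anchor using a global pose and the odometry pose at the same time."""
        self.map_from_odom = compose(map_pose, inverse(odom_pose))

    def current_pose(self, odom_pose):
        """Propagate the last global fix with odometry only.

        The result can also be passed to the next global localization
        as its initial guess.
        """
        return compose(self.map_from_odom, odom_pose)

coupler = LooseCoupler()
# A global fix arrives while the odometry reports (1, 0, 0).
coupler.on_global_fix(map_pose=(10.0, 5.0, math.pi / 2), odom_pose=(1.0, 0.0, 0.0))
# Later the odometry reports one more metre of forward motion.
pose = coupler.current_pose((2.0, 0.0, 0.0))
print(pose)
```

In this example the device faces along the map's y axis at the time of the fix, so one metre of forward odometry motion moves the estimated map pose by about one metre in y while the heading stays unchanged.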

Paper

L-C*: Visual-inertial Loose Coupling for Resilient and Lightweight Direct Visual Localization

Shuji Oishi, Kenji Koide, Masashi Yokozuka, Atsuhiko Banno

2023 IEEE International Conference on Robotics and Automation (ICRA2023), pp.4033-4039, London, UK, 29 May - 2 June, 2023
