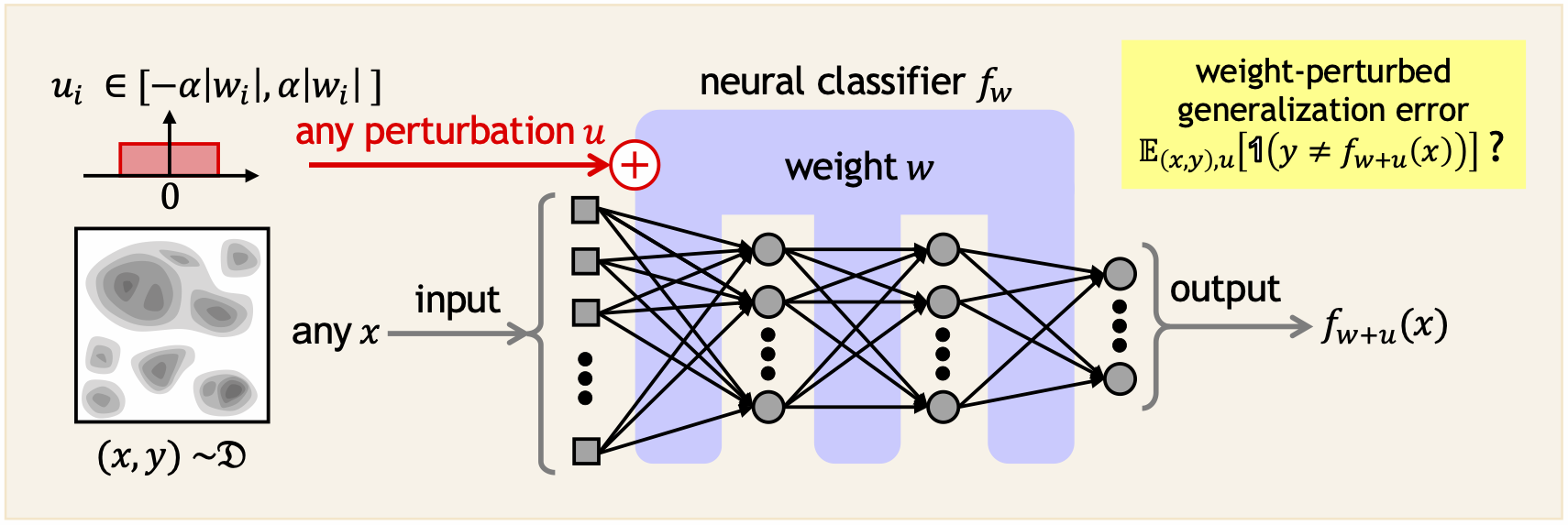

The "WP-GEB" in the tool name WP-GEB-Estimator-2 stands for weight-perturbed generalization bounds. WP-GEB-Estimator-2 is a tool for estimating upper bounds on the weight-perturbed generalization errors and the weight-perturbed generalization risks of neural classifiers (hereafter simply called classifiers).

Weight-perturbations are perturbations added to the weight-parameters of classifiers. Weight-perturbed generalization errors are the expected values of the misclassification-rates of classifiers over inputs and perturbations, where the inputs and the perturbations are sampled according to their respective distributions. Weight-perturbed generalization risks, on the other hand, are the expected existence rates of risky data. Risky data are inputs for which the misclassification-rate due to perturbations (i.e., the existence rate of adversarial weight-perturbations) exceeds an acceptable threshold.

Weight-perturbed generalization errors are effective for evaluating robustness against random perturbations (e.g., natural noise), while weight-perturbed generalization risks are effective for evaluating robustness against worst-case perturbations (e.g., adversarial attacks). The definitions of the weight-perturbed generalization error bounds and the details of WP-GEB-Estimator-2 are explained in the WP-GEB-Estimator-2 User Manual (English version [PDF:186KB] and Japanese version [PDF:346KB]).
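To make the two definitions concrete, the sketch below computes their empirical (sample) counterparts from a 0/1 table `miss[i][j]` that records whether input `i` is misclassified under perturbation `j`. It assumes such a table is already available. The function names are hypothetical and not part of WP-GEB-Estimator-2. The tool itself estimates *upper bounds* on the expected values rather than only these sample averages. For the risk, sampling random perturbations only approximates the existence of adversarial ones, and the tool additionally searches for them.

```python
from typing import Sequence

def perturbed_error(miss: Sequence[Sequence[int]]) -> float:
    """Empirical weight-perturbed error: the average of miss[i][j] over all
    inputs i and perturbations j (1 = misclassified, 0 = correctly classified)."""
    total = sum(sum(row) for row in miss)
    count = sum(len(row) for row in miss)
    return total / count

def perturbed_risk(miss: Sequence[Sequence[int]], threshold: float) -> float:
    """Empirical weight-perturbed risk: the fraction of inputs that are 'risky',
    i.e. whose misclassification-rate over the perturbations exceeds threshold."""
    risky = 0
    for row in miss:
        rate = sum(row) / len(row)  # per-input misclassification-rate
        if rate > threshold:
            risky += 1
    return risky / len(miss)
```

Consider the table `miss = [[0, 0, 0, 0], [0, 1, 0, 0], [1, 1, 1, 0]]`. The error is 4/12 ≈ 0.333. The per-input rates are 0, 0.25 and 0.75, so the risk with threshold 0.5 is 1/3.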

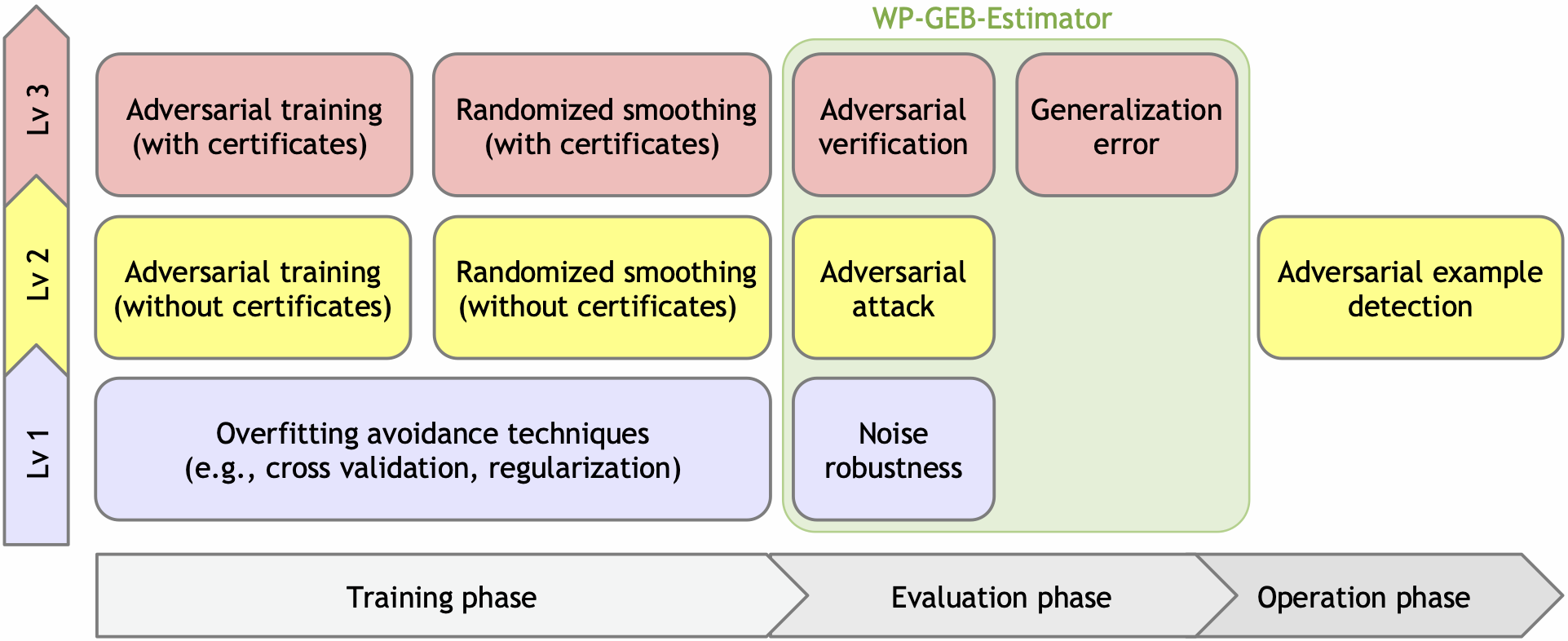

The Machine Learning Quality Management (MLQM) Guideline has been developed to clearly explain the quality of various industrial products that include statistical machine learning. This guideline defines an internal quality property called the stability of trained models, which means that trained models behave reasonably even for unseen input data. Figure 1, which is Figure 14 in MLQM-Guideline ver.4, shows the techniques for evaluating and improving stability in each phase and at each level. Version 3 is already available from this site, and version 4 will be published here soon. WP-GEB-Estimator-2 is a useful tool for evaluating the stability of trained classifiers in the evaluation phase because it provides the following functions:

- [Noise robustness] It can measure misclassification-rates of randomly weight-perturbed classifiers for a test-dataset and a perturbation sample.

- [Adversarial attack] It can search for adversarial weight-perturbations for each input in a test-dataset.

- [Adversarial verification] It can statistically estimate, with probabilistic confidence, an upper bound on the weight-perturbed generalization risk for any input.

- [Generalization error] It can statistically estimate, with probabilistic confidence, an upper bound on the weight-perturbed generalization error for any input.
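Both the [Adversarial verification] and [Generalization error] functions produce upper bounds "with probabilistic confidence". The sketch below illustrates what such a bound looks like using the one-sided Hoeffding inequality for a rate estimated from `n` i.i.d. samples. This is a standard textbook bound swapped in for illustration. The bounds WP-GEB-Estimator-2 actually computes, which involve sampling both inputs and perturbations, are defined in the User Manual and may differ. The function name is hypothetical.

```python
import math

def hoeffding_upper_bound(k: int, n: int, confidence: float) -> float:
    """One-sided Hoeffding upper bound on an unknown rate p, given k failures
    observed in n i.i.d. samples. With probability at least `confidence`,
    p <= k/n + sqrt(ln(1/delta) / (2n)), where delta = 1 - confidence."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    delta = 1.0 - confidence
    bound = k / n + math.sqrt(math.log(1.0 / delta) / (2.0 * n))
    return min(1.0, bound)  # a rate can never exceed 1
```

Suppose 5 misclassifications are observed among 1,000 samples. At 90% confidence the bound is about 0.039, well above the observed rate of 0.005. The margin shrinks as `n` grows, which is why larger test-datasets and perturbation samples give tighter bounds.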

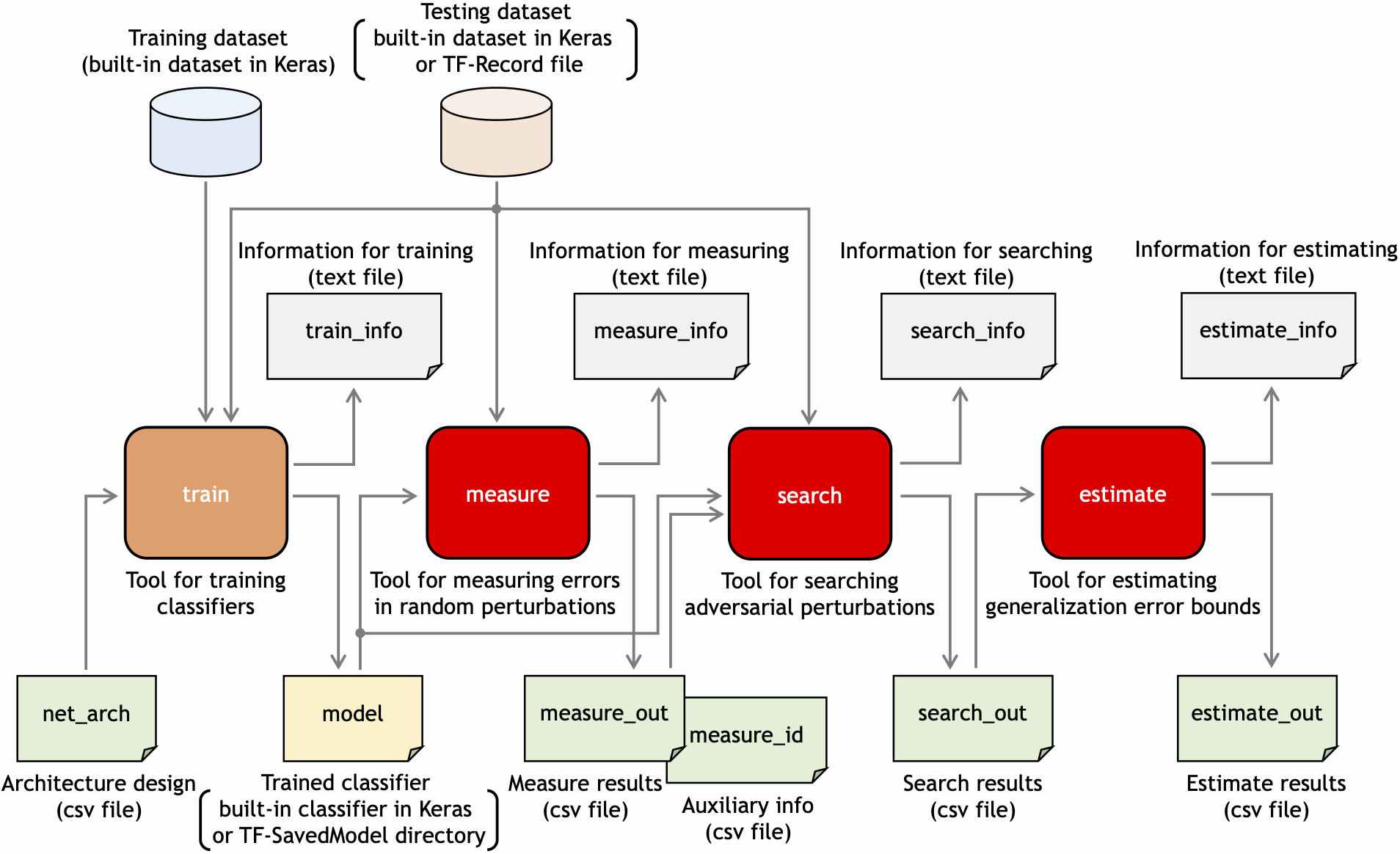

WP-GEB-Estimator-2 consists of four tools for training, searching, measuring, and estimating. These tools are written in Python using the TensorFlow/Keras libraries.

- train: trains classifiers to demonstrate WP-GEB-Estimator-2.
  - Input: Train-dataset, network architecture (CSV format), etc.
  - Output: Trained classifier (TensorFlow SavedModel format), etc.

- search: searches for adversarial weight-perturbations in trained classifiers.
  - Input: Test-dataset, trained classifier (SavedModel format), perturbation-ratios, etc.
  - Output: Adversarial search results (CSV format), including input information

- measure: measures the misclassification-rates of trained classifiers under random weight-perturbations.
  - Input: Test-dataset, trained classifier, adversarial search results (CSV format), etc.
  - Output: Measurement results (CSV format), including the adversarial search results

- estimate: estimates weight-perturbed generalization risk/error bounds.
  - Input: Measurement results, including search results (CSV format), etc.
  - Output: Estimated weight-perturbed generalization bounds (CSV format), etc.

Figure 2. The relationship between the input/output files of the four tools that constitute WP-GEB-Estimator-2
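The search and measure steps can be illustrated on a toy model. The sketch below is **not** WP-GEB-Estimator-2 code. It makes the following assumptions:

- It uses a hypothetical two-class linear classifier in pure Python.
- Each weight `w_i` is perturbed within ±`ratio`·|`w_i`|, and the bias is left unchanged.
- Because the model is linear, the worst-case perturbation is computed in closed form. This shortcut is not available for real neural classifiers, which is why a search is needed there.

```python
import random
from typing import List, Optional, Sequence, Tuple

Example = Tuple[List[float], int]  # (input vector, true label in {0, 1})

def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Toy linear classifier: class 1 if w.x + b > 0, otherwise class 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def random_perturbation(w: Sequence[float], ratio: float, rng: random.Random) -> List[float]:
    """Assumed perturbation model: each weight w_i is shifted by a value drawn
    uniformly from [-ratio*|w_i|, +ratio*|w_i|]; the bias is not perturbed."""
    return [wi + rng.uniform(-ratio * abs(wi), ratio * abs(wi)) for wi in w]

def measure(w: Sequence[float], b: float, data: Sequence[Example],
            ratio: float, n_perturb: int, seed: int = 0) -> List[float]:
    """'measure'-like step: per-input misclassification-rate over n_perturb
    random weight-perturbations (one perturbation sample shared by all inputs)."""
    rng = random.Random(seed)
    sample = [random_perturbation(w, ratio, rng) for _ in range(n_perturb)]
    return [sum(predict(wp, b, x) != y for wp in sample) / n_perturb for x, y in data]

def _sign(v: float) -> int:
    return (v > 0) - (v < 0)

def search(w: Sequence[float], b: float, x: Sequence[float], y: int,
           ratio: float) -> Optional[List[float]]:
    """'search'-like step, valid for this linear toy model only: the worst-case
    perturbation inside the box is a corner, so it is computed directly.
    Returns the adversarial weights if they flip the prediction, else None."""
    direction = -1.0 if y == 1 else 1.0  # push the score away from the true class
    w_adv = [wi + direction * ratio * abs(wi) * _sign(xi) for wi, xi in zip(w, x)]
    return w_adv if predict(w_adv, b, x) != y else None
```

Consider `w = [1.0, -2.0]`, `b = 0.0` and the input `x = [3.0, 1.0]` with label 1:

- At ratio 0.1, `search` returns `None`.
- At ratio 0.5, `search` finds the adversarial weights `[0.5, -3.0]`.
- With ratio 0.5, `measure` reports a misclassification-rate near 0.19. The exact probability under this uniform model is 0.1875.

The gap between a small random misclassification-rate and the existence of an adversarial perturbation mirrors the difference between the error and risk bounds.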

WP-GEB-Estimator-2 is written in Python using the TensorFlow and NumPy libraries. The software versions used in development are Python 3.10.16, TensorFlow 2.16.2, Keras 3.6.0, and NumPy 1.26.4. Refer to the attached requirements.txt file for details.
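The sketch below compares a local environment against the development versions listed above, using the standard-library `importlib.metadata` module. The pinned values are copied from the sentence above, and the attached requirements.txt remains the authoritative list. The helper name `version_mismatches` is hypothetical. The sketch assumes the packages were installed under their usual PyPI names (`tensorflow`, `keras`, `numpy`).

```python
from importlib import metadata
from typing import Callable, Dict, List

# Versions stated in the text; requirements.txt is the authoritative source.
DEVELOPMENT_VERSIONS = {"tensorflow": "2.16.2", "keras": "3.6.0", "numpy": "1.26.4"}

def version_mismatches(expected: Dict[str, str],
                       get_version: Callable[[str], str] = metadata.version) -> List[str]:
    """Return one message per package whose installed version differs from
    the expected one, or that is not installed at all."""
    problems = []
    for name, want in expected.items():
        try:
            have = get_version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed (expected {want})")
            continue
        if have != want:
            problems.append(f"{name}: {have} installed, {want} expected")
    return problems

if __name__ == "__main__":
    import sys
    print(f"python: {sys.version.split()[0]} (developed with 3.10.16)")
    for line in version_mismatches(DEVELOPMENT_VERSIONS):
        print(line)
```

A version mismatch does not necessarily break the tools. It only flags where behaviour may differ from the development setup.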

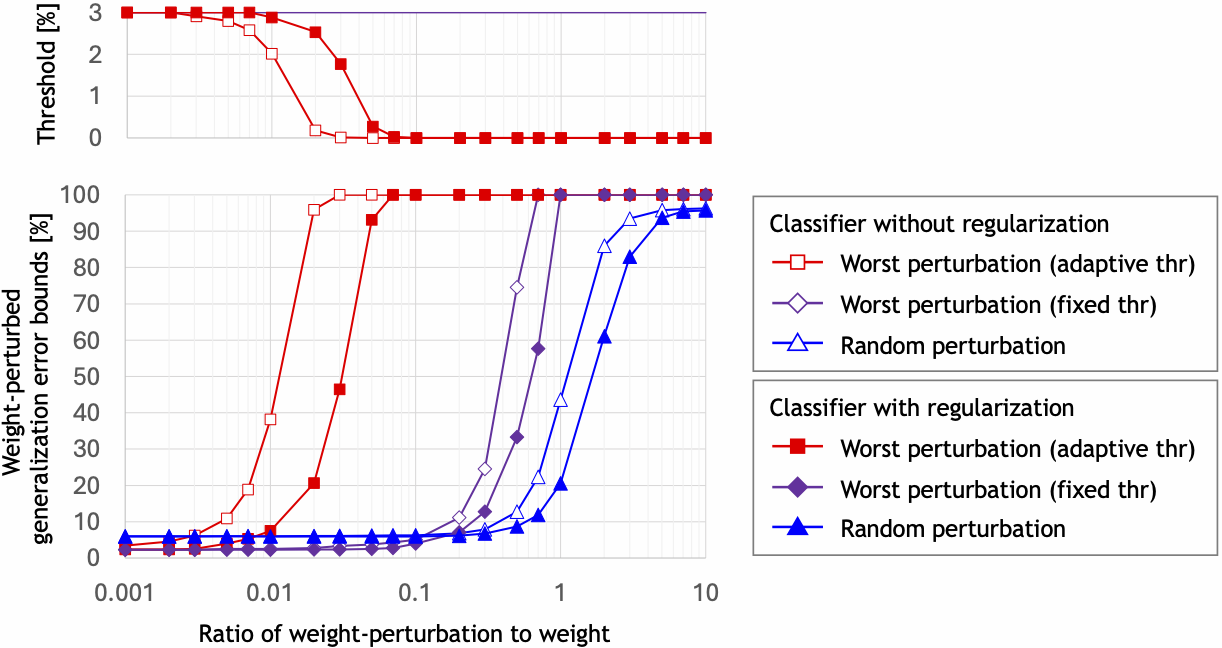

Figure 3 shows a graph of results estimated by WP-GEB-Estimator-2. It plots the weight-perturbed generalization risk/error bounds (confidence: 90%) and the generalization acceptable thresholds for two classifiers: one without regularization and one with an L2-regularization coefficient of 0.001. The graph was drawn with Microsoft Excel from estimate_out.csv. The horizontal axis represents the ratio of the weight-perturbation to the weight.

Figure 3 clearly shows that the classifier with regularization is more robust to weight-perturbations than the classifier without regularization, even though their errors do not differ when no perturbation is added. In addition, the weight-perturbed generalization risk bounds with search start increasing at a ratio two orders of magnitude smaller than the ratio at which the weight-perturbed generalization error bounds start increasing.

Figure 3. The estimated results of weight-perturbed generalization risks/errors.
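The comparison of onset ratios described for Figure 3 can be made numerical. The sketch below works on plain lists of (ratio, bound) values, because the column layout of estimate_out.csv is not described here. It assumes that a curve "starts increasing" once it rises more than a tolerance `tol` above its value at the smallest ratio. That tolerance is an arbitrary choice, and both function names are hypothetical.

```python
import math
from typing import Optional, Sequence

def onset_ratio(ratios: Sequence[float], bounds: Sequence[float],
                tol: float = 0.01) -> Optional[float]:
    """Smallest perturbation ratio at which a bound exceeds its value at the
    smallest ratio by more than tol; None if it never does."""
    pairs = sorted(zip(ratios, bounds))  # order points by perturbation ratio
    baseline = pairs[0][1]
    for ratio, bound in pairs:
        if bound - baseline > tol:
            return ratio
    return None

def orders_of_magnitude_apart(risk_onset: float, error_onset: float) -> float:
    """Number of powers of ten between the two onset ratios."""
    return math.log10(error_onset / risk_onset)
```

If the risk bound rose at a ratio of 1e-3 and the error bound at 1e-1, `orders_of_magnitude_apart` would return 2.0, corresponding to "two orders of magnitude" in the text.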

Following the philosophy of open-source software, we allow anyone to use WP-GEB-Estimator-2 free of charge under the Apache License Version 2.0. You must agree to the license before using WP-GEB-Estimator-2.

The source code and the user manual of WP-GEB-Estimator-2 are available.

- The Python source code of WP-GEB-Estimator-2 can be downloaded:

- WP-GEB-Estimator-2 User Manual can be downloaded:

- Yoshinao Isobe, Estimating Generalization Error Bounds for Worst Weight-Perturbed Neural Classifiers (written in Japanese), The 38th Annual Conference of the Japanese Society for Artificial Intelligence (JSAI), 2024. [Abstract] [PDF: 630KB] (this result was estimated by WP-GEB-Estimator-1.)

- Yoshinao Isobe, Probabilistic safety certification of neural classifiers against adversarial perturbations (written in Japanese), AIST Cyber-Physical Security Research Symposium, 2025. [PDF: 671KB] (this result was estimated by WP-GEB-Estimator-2.)

This work was supported by the project JPNP20006 commissioned by NEDO.

I would also like to express my gratitude to the members of the joint research project with Hitachi, Ltd. for their valuable advice on this work.

Yoshinao Isobe

Cyber Physical Security Research Center

National Institute of Advanced Industrial Science and Technology (AIST), Japan
