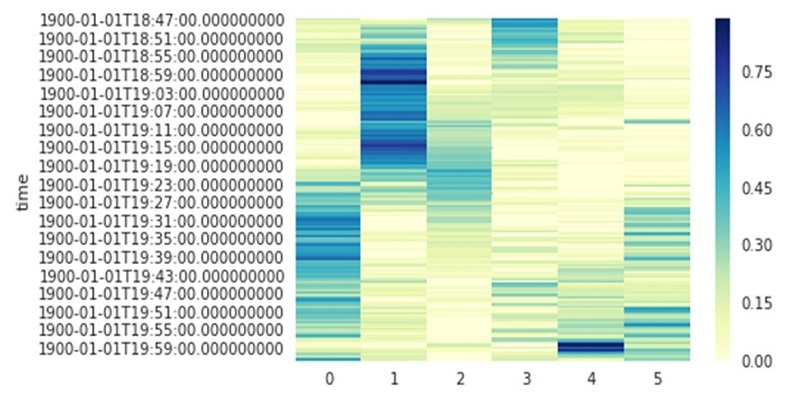

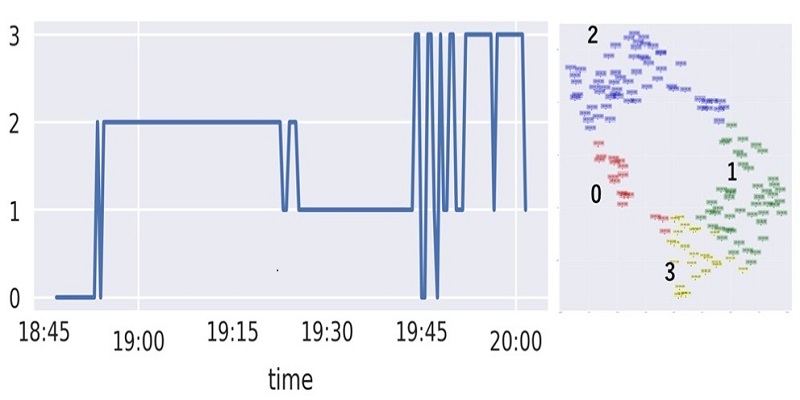

Sensing, Modeling, and Visualizing Pedagogical Environments

The CREST Symbiotic Interaction Research Group is advancing technologies for sensing, modeling, and visualizing the sounds of learners' interactions and their surrounding environments. We are currently focusing on developing two systems: a system that visualizes human interactions using a microphone array, and a mobile auditory sensing system that uses smartwatches. This work is conducted as part of "Pedagogical information infrastructure based on mutual interaction, Symbiotic Interaction, CREST."