|

Image Capture by Video4Linux - Why don't you start computer vision research?


Capturing images is the first step in building a vision system. There are many ways to capture images, e.g., using a video capture device on the PCI bus, USB, or IEEE 1394. Because I think video input is still the standard, this page gives an overview of image capture using a capture device and Video4Linux (V4L). You can download a sample program, and with it you can start video processing fairly easily. This page relies on V4L, which exists thanks to the sincere efforts of many people. I really appreciate their work.

| [Things you need]

|

|

To start capturing images with V4L, you need:

|

|

PC with Linux

|

|

Capture device with Bt848

or Bt878 (Bt8x8 is a capture chip)

|

|

Video camera or player

|

|

|

|

[Equipment I used]

|

|

Choosing equipment can be confusing. I used a low-cost capture device and a video camera of the kind you may already have at home.

|

|

PC: Pentium 850 MHz, 512 MB memory; Linux: Vine Linux 2.1CR

|

|

Capture device: IO-DATA GV-VCP2M/PCI

|

|

Video camera: Sony HandyCam DCR-PC100

|

|

The performance of the PC affects processing speed, but an older PC with less memory can also be used. The capture device above may not be available outside Japan, but any capture device with a Bt848 or Bt878 chip will work. You may find suitable devices through the sites listed at the end of this page. The cost is about 50 to 100 USD. Any video camera with a video output can be used. If you don't have one, you can use a video or DVD player (such as a PlayStation 2), but then you can process only recorded material.

|

|

|

[Installation of device driver]

|

|

After you install the capture device in a PCI slot, you need to install the device driver that controls it. A typical procedure is as follows:

|

|

(1) Find the driver modules: i2c.o, videodev.o, and bttv.o. You may find them in /lib/modules/2.2.x/misc (where 2.2.x is the kernel version). You can also run the "find" command from the root directory to locate them.

|

|

(2) Check for the device file. If there is no device file, e.g., /dev/video, create it with "mknod".

|

|

(3) Load the modules with /sbin/insmod.

|

|

(4) Confirm the installation with "dmesg".

|

|

If you use the IO-DATA GV-VCP2M/PCI, load bttv.o with the option card=2: /sbin/insmod bttv.o card=2. The capture device is then recognized as "Hauppauge old". Without this option, the second composite input cannot be used. If you are running Linux with kernel 2.4.x, i2c.o may be split into three modules: i2c-core.o, i2c-dev.o, and i2c-algo-bit.o. It is convenient to add the commands that load the modules to /etc/rc.d/rc.local so that they run on every reboot.

|

|

|

[Capture test]

|

|

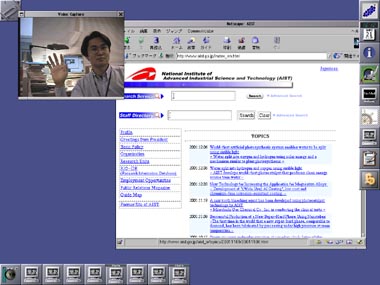

You can test the capture device with existing software such as xawtv. I have prepared the following sample program as a starting point for building a vision system.

|

|

show_video_v4l.tar.gz

|

|

Uncompress the file in a directory you

use. Change default device file, /dev/video,

in capture_v4l.h for your system. Mesa and

glut must be installed to link executable

file. They may be included in the recent

distribution like Red Hat, but you can download

them from the sites in links blow. If you

succeed, you can find show_video_v4l

in the directory. Use the options -v0(composite1)

-v1icomposite2j -v2iS inputj according to the input

channel you use (this option may not be

used to other capture devices. Default is

S input). You may see 320x240 video window

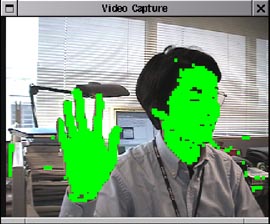

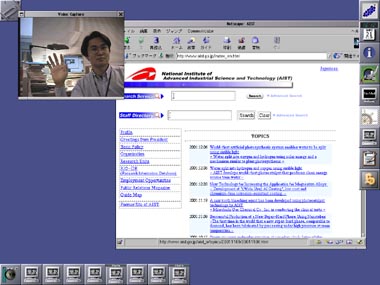

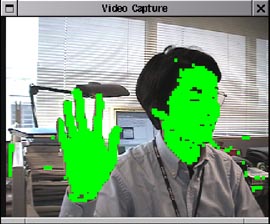

on X window (left figure). Option

-s enables color extractor based on

simple thresholding. Extracted pixels are

shown by green. As you can see in right figure, they

almost correspond to skin of Japanese. Push

a left button of mouse on the window to

end the program.
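To illustrate what a color extractor based on simple thresholding might look like, here is a minimal sketch. It is not the code from show_video_v4l: the names rgb_range and extract_color are hypothetical, the frame is assumed to be packed 24-bit data in R, G, B order (a real capture buffer may use a different order, such as BGR), and the threshold values used by the sample are not given on this page.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-channel range; a pixel is "extracted" if every
   channel lies inside its [min, max] interval. */
typedef struct {
    unsigned char r_min, r_max;
    unsigned char g_min, g_max;
    unsigned char b_min, b_max;
} rgb_range;

/* Paint every pixel inside the range pure green, in place.
   Assumes packed 24-bit pixels in R, G, B order.
   Returns the number of extracted pixels. */
size_t extract_color(unsigned char *rgb, size_t n_pixels, const rgb_range *t)
{
    size_t i, count = 0;

    assert(rgb != NULL && t != NULL);
    for (i = 0; i < n_pixels; i++) {
        unsigned char *p = rgb + 3 * i;
        if (p[0] >= t->r_min && p[0] <= t->r_max &&
            p[1] >= t->g_min && p[1] <= t->g_max &&
            p[2] >= t->b_min && p[2] <= t->b_max) {
            p[0] = 0;      /* R */
            p[1] = 255;    /* G: mark the extracted pixel in green */
            p[2] = 0;      /* B */
            count++;
        }
    }
    return count;
}
```

For a 320x240 frame, n_pixels would be 320 * 240. The range has to be tuned by hand for the camera and lighting in use.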

|

|

You can get an overview of programming with V4L, Mesa, and glut from the sites in the links below and from the source code of the sample. A good first step toward your own vision system is to replace the color extractor in the sample with the processing you need.
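One possible way to make the processing step replaceable is to pass it as a function pointer. The sketch below is an assumption about how such a hook could be organized, not a description of the sample's source: frame_filter, process_frame, and to_gray are hypothetical names, and the frame is again assumed to be packed 24-bit R, G, B data.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical hook type: any in-place operation on one RGB frame. */
typedef void (*frame_filter)(unsigned char *rgb, int width, int height);

/* Example filter: replace each pixel by its gray level, using an
   integer approximation of luminance (weights 77 + 150 + 29 = 256). */
void to_gray(unsigned char *rgb, int width, int height)
{
    size_t i, n;

    assert(rgb != NULL && width > 0 && height > 0);
    n = (size_t)width * (size_t)height;
    for (i = 0; i < n; i++) {
        unsigned char *p = rgb + 3 * i;
        unsigned char y =
            (unsigned char)((77 * p[0] + 150 * p[1] + 29 * p[2]) >> 8);
        p[0] = p[1] = p[2] = y;
    }
}

/* Apply the selected filter to a captured frame; NULL means "no processing". */
void process_frame(unsigned char *rgb, int width, int height, frame_filter f)
{
    if (f != NULL)
        f(rgb, width, height);
}
```

With this arrangement, the display loop would call process_frame(frame, 320, 240, to_gray) after each capture, and a different operation can be tried by passing another function.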

|

|

|

|

|

|

|
