On this web page, we provide the subjective music similarity evaluation results obtained in our experiments described in papers [1] and [2]. This data was collected for the purpose of developing an estimation method which can detect subjective similarity between pieces of music.

Data Collection

Subjects

A total of 27 subjects participated in the experiment (13 male and 14 female).

All of the subjects were in their twenties.

Songs used

A total of 80 songs were used in the experiment. They were taken from the RWC music database (Popular music) [3], which includes 100 songs.

The songs used were all Japanese popular music (J-Pop) songs, namely songs No. 1 through No. 80 of the database.

Each recording used was a 30-second excerpt, beginning at the starting point of the first chorus section.

The RWC music database has been annotated (AIST annotation [4]), and this annotation was used to obtain the starting points of the chorus sections.
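The excerpting step described above can be sketched as follows. This is only an illustration, not the tool used in the experiment: the function name `cut_excerpt` is hypothetical, and the chorus start time is assumed to have already been read from the annotation (its file format is not described on this page).

```python
import wave


def cut_excerpt(src, dst, start_sec, duration_sec=30.0):
    """Copy `duration_sec` seconds of audio from `src` to `dst`, starting at `start_sec`.

    `src` and `dst` may be file paths or binary file objects.
    Returns the number of audio frames written.
    """
    with wave.open(src, "rb") as reader:
        rate = reader.getframerate()
        start_frame = int(round(start_sec * rate))
        n_frames = int(round(duration_sec * rate))

        # Clamp the start so a late start time does not raise an error.
        reader.setpos(min(start_frame, reader.getnframes()))
        # readframes() simply returns fewer frames near the end of the file.
        frames = reader.readframes(n_frames)
        frame_size = reader.getsampwidth() * reader.getnchannels()

        with wave.open(dst, "wb") as writer:
            # Reuse channel count, sample width and sample rate of the source.
            writer.setparams(reader.getparams())
            writer.writeframes(frames)

    return len(frames) // frame_size
```

For a song whose first chorus starts at, say, 62.4 seconds (a made-up value), `cut_excerpt("song.wav", "excerpt.wav", 62.4)` would write a 30-second excerpt, or a shorter one if the file ends sooner.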

Experimental Procedure

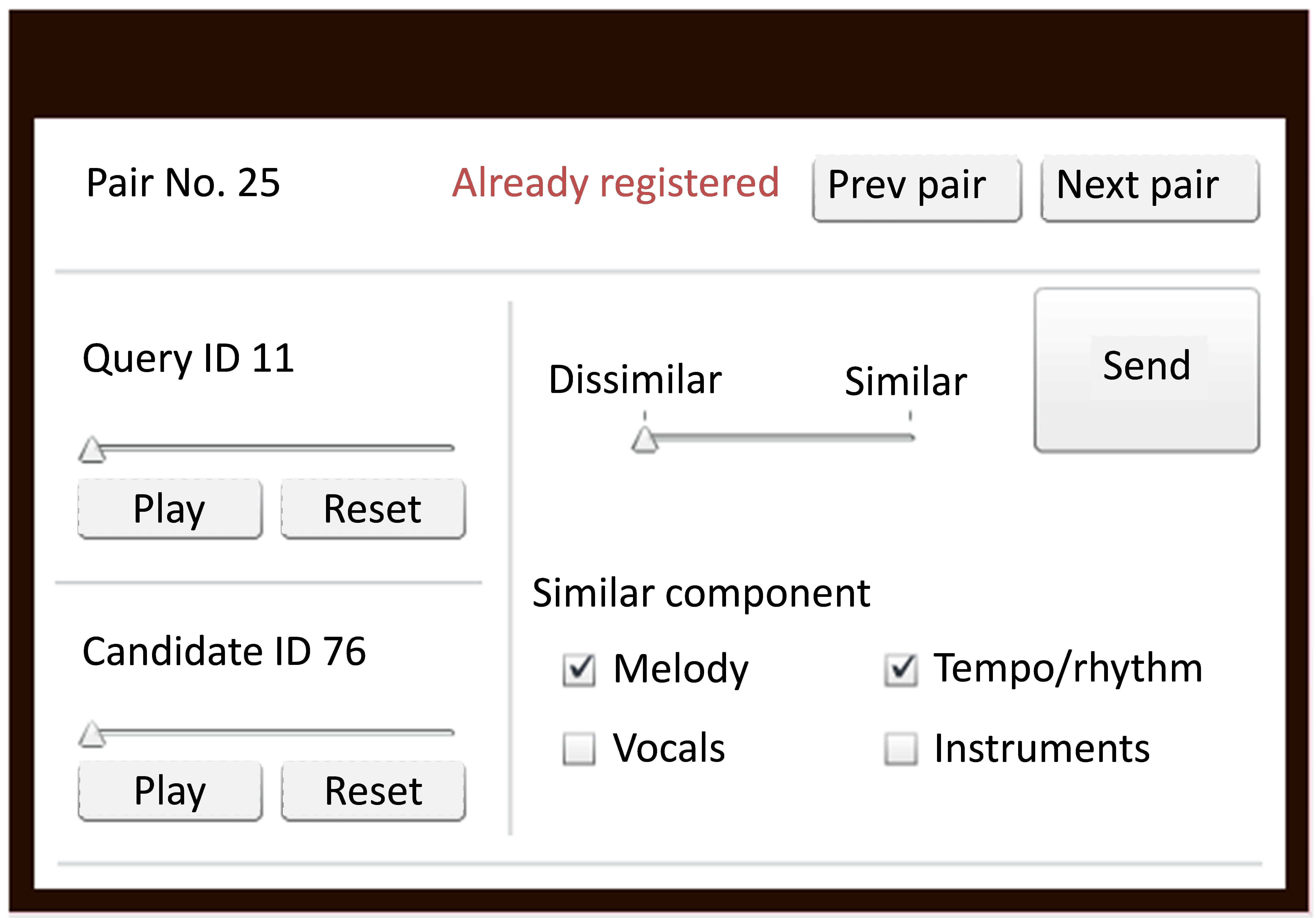

A web-browser-based system for collecting subjective similarity data was created for the experiment (see the figure above).

First, the system presented two songs to a subject (a ‘query’ song and a ‘candidate’ song); we refer to these two songs together as a “pair”.

For each trial, a pair was chosen at random from among 200 pairs selected before the experiment, and the ‘query’ and ‘candidate’ designations were randomly assigned to the two songs in the pair.
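One way to picture this randomization is the sketch below. It assumes that each subject sees every one of the 200 pairs exactly once in random order, which matches the 200 different pairs per subject described in this section. The function name `build_trial_schedule` is hypothetical, and the placeholder pairs are generated arbitrarily because this page does not say how the 200 pairs were actually selected.

```python
import itertools
import random


def build_trial_schedule(pairs, rng):
    """Return one (query, candidate) tuple per pair, in random order."""
    order = list(pairs)
    rng.shuffle(order)  # random presentation order, each pair used once
    schedule = []
    for song_a, song_b in order:
        # Randomly decide which song of the pair plays the 'query' role.
        if rng.random() < 0.5:
            song_a, song_b = song_b, song_a
        schedule.append((song_a, song_b))
    return schedule


# Placeholder data only: 200 arbitrary pairs drawn from song IDs 1..80.
rng = random.Random(0)
all_pairs = list(itertools.combinations(range(1, 81), 2))
placeholder_pairs = rng.sample(all_pairs, 200)
schedule = build_trial_schedule(placeholder_pairs, rng)
```

Passing an explicit `random.Random` instance keeps a subject's schedule reproducible from a seed.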

Each subject indicated whether the pair was similar or dissimilar overall, and then decided whether each musical component of the songs (melody, tempo/rhythm, vocals, and instruments) was similar or not.

For example, if the subject felt a pair was dissimilar, but felt that the melody and tempo/rhythm were similar, the subject chose ‘dissimilar’ and checked ‘melody’ and ‘tempo/rhythm’ as similar components on the interface.

On the other hand, if the subject felt a pair was similar and also thought ‘tempo/rhythm’ and ‘instruments’ were similar, the subject chose ‘similar’ and checked ‘tempo/rhythm’ and ‘instruments’ on the interface.
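The two examples above can be written out as records. The sketch below assumes the 0/1 coding and column order used in SubjectiveEvaluationResults.csv (subject, query, candidate, overall, melody, tempo/rhythm, vocals, instruments). The names `Trial` and `encode_trial`, and the subject and song IDs in the usage lines, are made up for illustration.

```python
from typing import NamedTuple

COMPONENTS = ("melody", "tempo/rhythm", "vocals", "instruments")


class Trial(NamedTuple):
    subject_id: int
    query_id: int
    candidate_id: int
    overall: int        # 0: dissimilar, 1: similar
    melody: int
    tempo_rhythm: int
    vocals: int
    instruments: int


def encode_trial(subject_id, query_id, candidate_id, overall_similar, checked):
    """Turn one answer (overall choice plus checked components) into a Trial."""
    checked = set(checked)
    unknown = checked - set(COMPONENTS)
    if unknown:
        raise ValueError(f"unknown component(s): {sorted(unknown)}")
    # One 0/1 flag per component, in the fixed COMPONENTS order.
    flags = [int(name in checked) for name in COMPONENTS]
    return Trial(subject_id, query_id, candidate_id, int(overall_similar), *flags)


# 'dissimilar' overall, but melody and tempo/rhythm checked as similar.
first = encode_trial(1, 5, 12, False, ["melody", "tempo/rhythm"])
# 'similar' overall, with tempo/rhythm and instruments checked.
second = encode_trial(1, 30, 7, True, ["tempo/rhythm", "instruments"])
```

Here `first` ends in the flags `0, 1, 1, 0, 0` and `second` in `1, 0, 1, 0, 1`, so the overall judgment and the component judgments are recorded independently of each other.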

During the experiment, each subject repeated this process 200 times with 200 different pairs of songs.

The subjects could replay the songs and re-evaluate the pair repeatedly if necessary.

The time allowed for the experiment was 200 minutes (four sets of 50 minutes).

Subjects rested for 10 minutes between sets.

Similarity Evaluation Results

- Similarity evaluation results ( SubjectiveEvaluationResults.csv )

- Songs used for data collection ( RWC_JPop(chorus).zip ) [To download this file, you will be asked to enter the user ID and password that you originally received for downloading Standard MIDI Files (SMF). This file cannot be redistributed.]

SubjectiveEvaluationResults.csv has 5400 rows and 8 columns. Each row corresponds to one subjective evaluation trial (27 subjects × 200 trials = 5400). The columns are as follows:

- Subject ID

- Query song ID

- Candidate song ID

- Results of overall similarity evaluation (0:dissimilar, 1:similar)

- Results of melody similarity evaluation (0:dissimilar, 1:similar)

- Results of tempo/rhythm similarity evaluation (0:dissimilar, 1:similar)

- Results of vocals similarity evaluation (0:dissimilar, 1:similar)

- Results of instruments similarity evaluation (0:dissimilar, 1:similar)
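A minimal sketch of reading the file with this column layout is given below. It assumes the file is comma-separated, has no header row, and contains only integers; none of this is stated on this page, so adjust as needed. The short column names in `COLUMNS` and the function name `load_results` are hypothetical.

```python
import csv

# Hypothetical short names for the eight columns, in the order listed above.
COLUMNS = [
    "subject_id",
    "query_id",
    "candidate_id",
    "overall",
    "melody",
    "tempo_rhythm",
    "vocals",
    "instruments",
]


def load_results(text_stream):
    """Parse evaluation rows from an open text stream into a list of dicts."""
    rows = []
    for line_no, fields in enumerate(csv.reader(text_stream), start=1):
        if not fields:
            continue  # skip blank lines
        if len(fields) != len(COLUMNS):
            raise ValueError(
                f"line {line_no}: expected {len(COLUMNS)} fields, got {len(fields)}"
            )
        # Assumes every field is an integer (IDs and 0/1 judgments).
        rows.append(dict(zip(COLUMNS, (int(value) for value in fields))))
    return rows


# Usage (assumes the file is in the working directory):
#   with open("SubjectiveEvaluationResults.csv", newline="") as f:
#       rows = load_results(f)
```

If these assumptions hold, the full file should yield 5400 rows, that is, 200 rows for each of the 27 subjects.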

RWC_Jpop(chorus).zip contains the songs used for data collection in .wav format. Each song is labeled with its song ID, which corresponds to the query song ID and candidate song ID in SubjectiveEvaluationResults.csv.
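Because the ‘query’ and ‘candidate’ roles were assigned at random, the same two song IDs can appear in either order in different rows. The sketch below shows one possible way to pool judgments for a pair regardless of order. It is an illustration only, not the analysis used in [1] or [2], and the function name `similarity_rate_by_pair` is hypothetical.

```python
from collections import defaultdict


def similarity_rate_by_pair(trials):
    """Fraction of 'similar' answers per unordered song pair.

    `trials` is an iterable of (query_id, candidate_id, overall) tuples,
    where `overall` is 0 (dissimilar) or 1 (similar).
    """
    counts = defaultdict(lambda: [0, 0])  # pair -> [similar votes, total votes]
    for query_id, candidate_id, overall in trials:
        # Sort the two IDs so (a, b) and (b, a) share one key.
        key = (min(query_id, candidate_id), max(query_id, candidate_id))
        counts[key][0] += overall
        counts[key][1] += 1
    return {pair: similar / total for pair, (similar, total) in counts.items()}


rates = similarity_rate_by_pair([(1, 2, 1), (2, 1, 0), (3, 4, 1)])
```

With these three made-up trials, `rates` maps `(1, 2)` to `0.5` and `(3, 4)` to `1.0`.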

References

- [1] Shota Kawabuchi, Chiyomi Miyajima, Norihide Kitaoka, and Kazuya Takeda. Subjective similarity of music: data collection for individuality analysis. Asia Pacific Signal and Information Processing Association, Hollywood, CA, USA, pp. 1-5, December 2012.

- [2] Shota Kawabuchi, Chiyomi Miyajima, Norihide Kitaoka, and Kazuya Takeda. Data collection for individuality analysis of subjective music similarity. Transactions of Information Processing Society of Japan, Vol. 54, No. 4, pp. 1-12, April 2013 (in Japanese).

- [3] Masataka Goto, Hiroki Hashiguchi, Takuichi Nishimura, and Ryuichi Oka. RWC Music Database: Popular, classical and jazz music databases. Proceedings of the 3rd International Conference on Music Information Retrieval, Paris, France, pp. 287-288, October 2002.

- [4] Masataka Goto. AIST Annotation for the RWC Music Database. Proceedings of the 7th International Conference on Music Information Retrieval, Victoria, Canada, pp. 359-360, October 2006.

Links

- Shota Kawabuchi, Nagoya University Graduate School of Information Science

- Prof. Kazuya Takeda, Nagoya University Graduate School of Information Science

- Dr. Masataka Goto, Media Interaction Group, National Institute of Advanced Industrial Science and Technology (AIST)

- RWC Music Database

- AIST Annotation for the RWC Music Database