Wearable Behavior Navigation System

Last Update: April 3, 2017

What Is the Behavior Navigation System?

Cellular phones with Global Positioning System (GPS) navigation functions are now popular, and wearable devices are available that guide a walking person in a desired direction. In the future, navigation is expected to extend beyond walking to general human behavior. For ordinary behavior, a person does not need navigation by others. However, a person standing next to an injured or ill person needs first aid instructions from a first aid expert. In order to realize general human behavior navigation, we proposed and developed the wearable Behavior Navigation System (BNS) using Augmented Reality (AR)/Mixed Reality (MR) technology.

This video, archived at the UNESCO website, shows the concept of the Wearable Behavior Navigation System (WBNS).

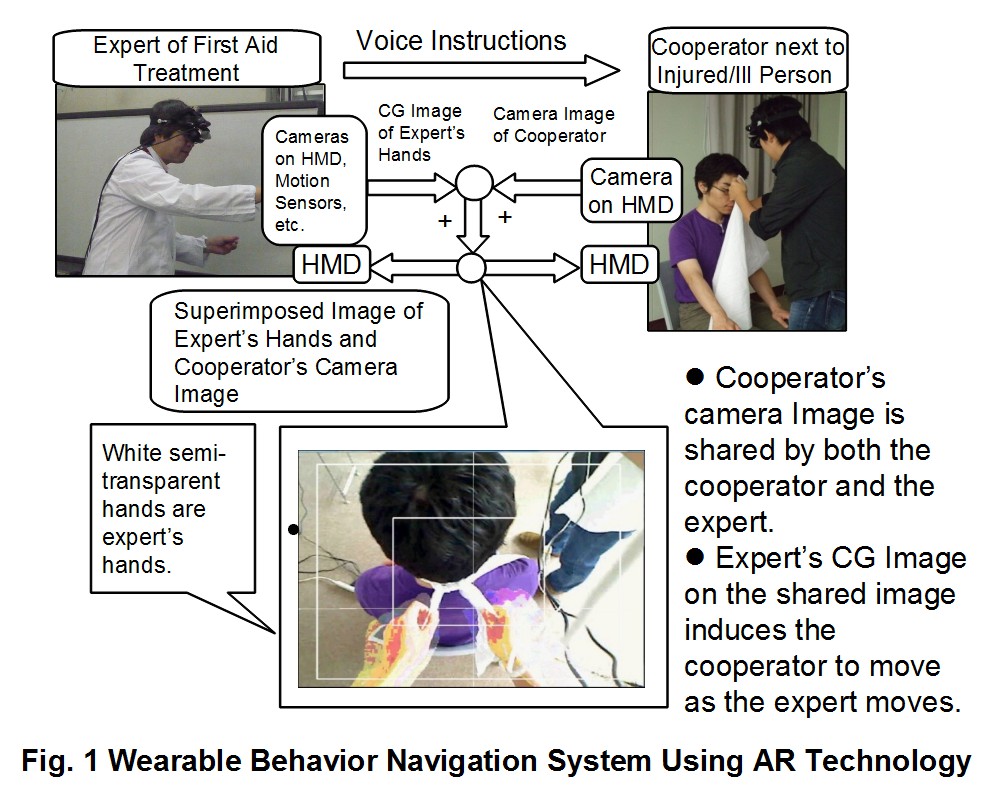

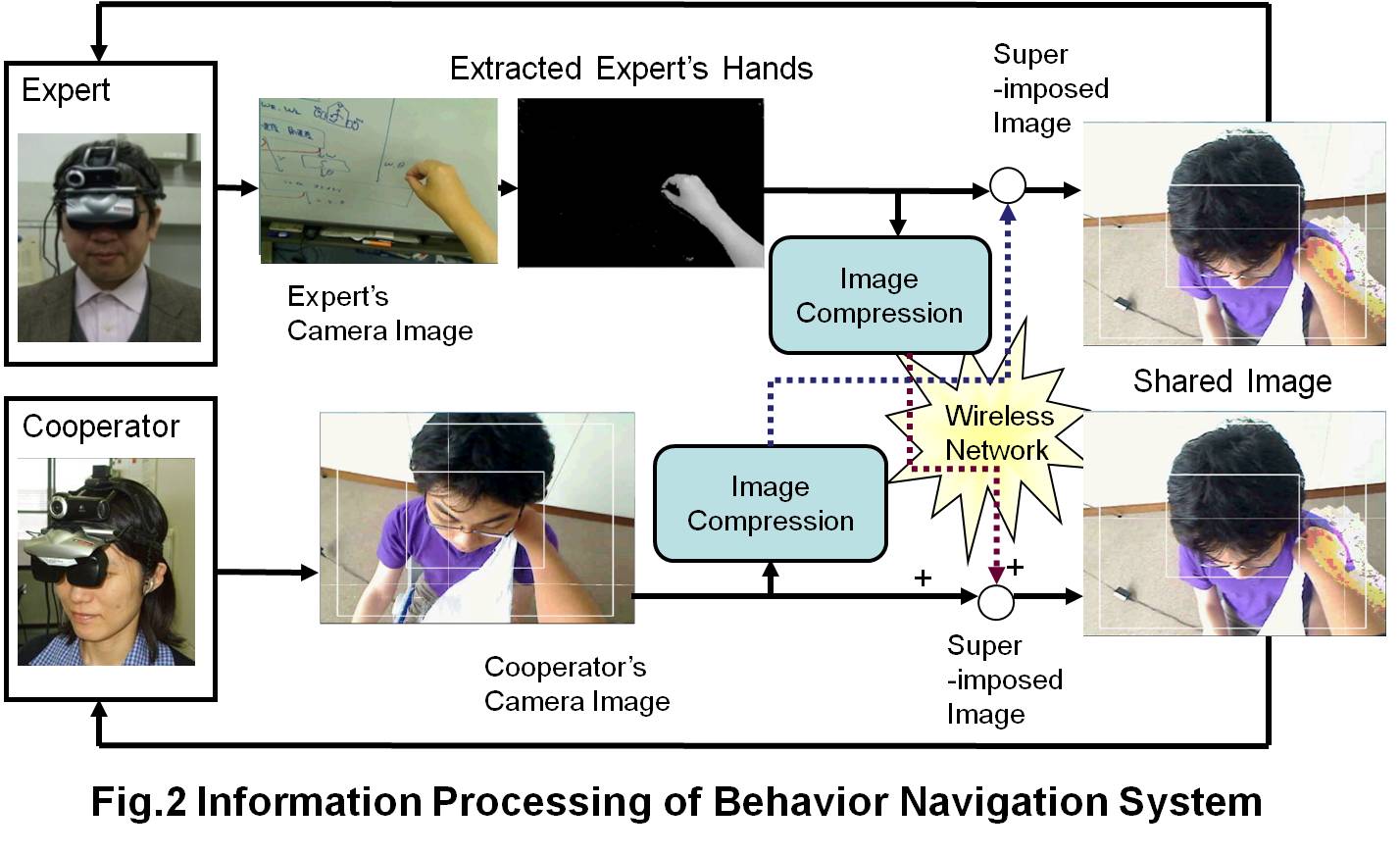

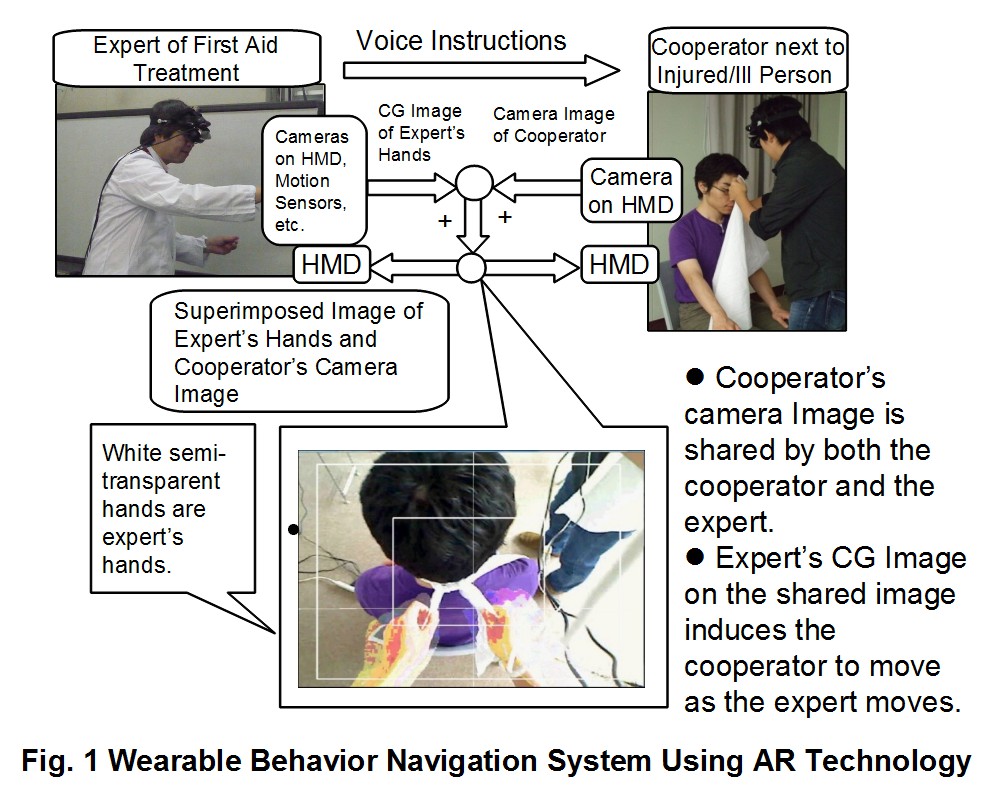

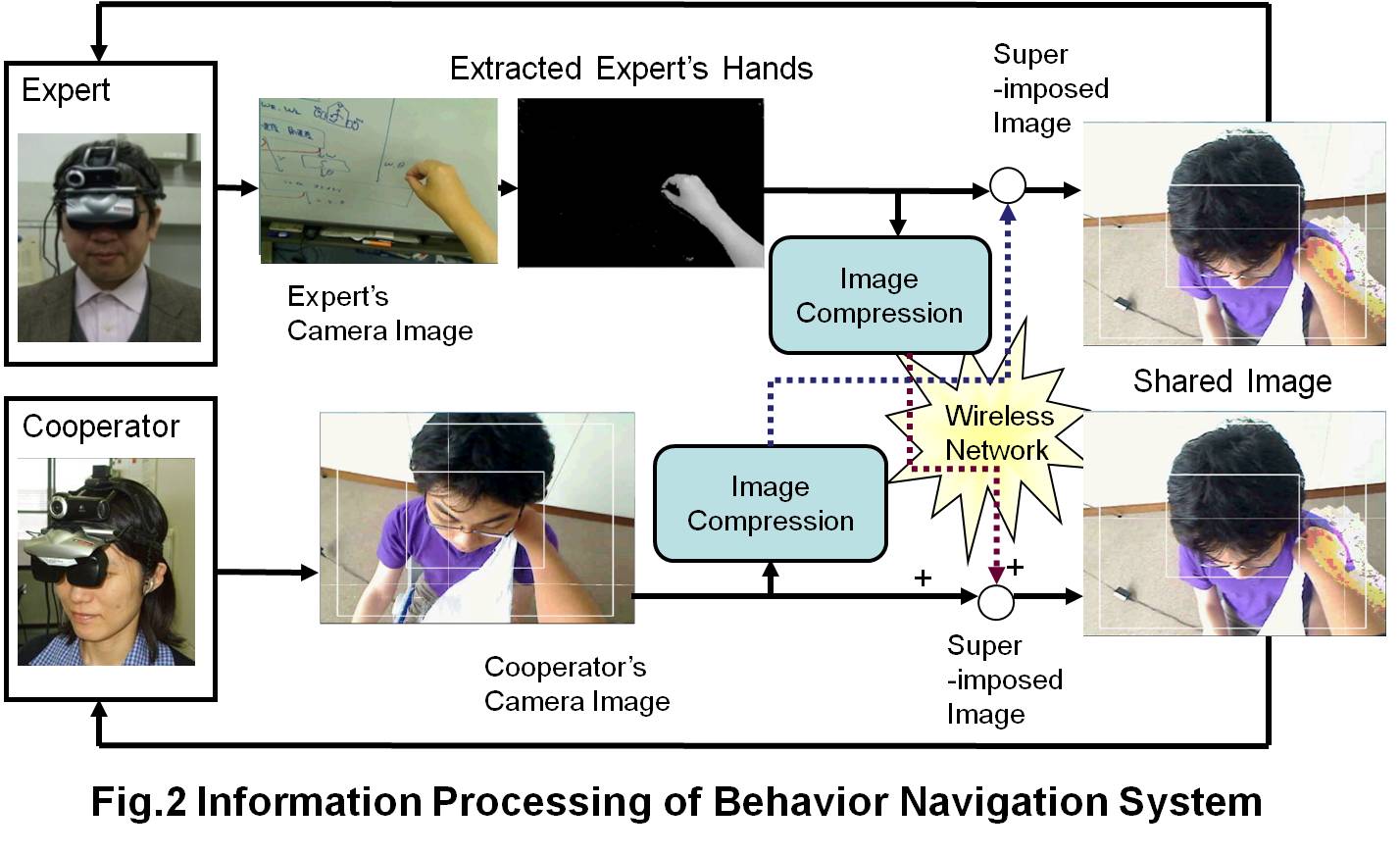

We have proposed and developed a Behavior Navigation System (BNS) using AR/MR technology, as shown in Fig. 1. The BNS relies on the feedback of visual information, which is acquired by a cooperator near the patient and provided to an expert at a remote site, such as an emergency and critical care center. The cooperator's head-mounted camera captures what the cooperator sees, and the captured images are sent to the expert. The expert can thus understand the status of the injured or ill person through the cooperator's sensory information. The BNS has a display system that superimposes a computer-generated (CG) image of the expert over the real images captured by the cameras and shows the result on the Head Mounted Display (HMD) of the cooperator. Both the expert and the cooperator see the CG image of the expert and the real camera image of the cooperator on the screens of their HMDs. The cooperator can directly mimic the movements of the expert, so the expert can guide the cooperator to move as the expert moves. Fig. 2 shows the information processing of the BNS using AR/MR in detail.
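The superimposition step can be illustrated with a per-pixel alpha blend. The following is only a minimal sketch under stated assumptions, not the BNS implementation: the function name `superimpose`, the boolean mask marking where the expert's CG image is present, and the default opacity of 0.5 are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255
Image = List[List[Pixel]]      # rows of pixels


def superimpose(camera: Image, cg: Image, mask: List[List[bool]],
                opacity: float = 0.5) -> Image:
    """Blend the CG image over the camera image wherever mask is True.

    Hypothetical helper: pixels outside the mask keep the camera value,
    pixels inside it become opacity * cg + (1 - opacity) * camera.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be in [0, 1]")
    out: Image = []
    for cam_row, cg_row, mask_row in zip(camera, cg, mask):
        row = []
        for cam_px, cg_px, covered in zip(cam_row, cg_row, mask_row):
            if covered:
                # Alpha blend each colour channel separately.
                row.append(tuple(
                    round(opacity * g + (1.0 - opacity) * c)
                    for c, g in zip(cam_px, cg_px)
                ))
            else:
                # No CG content here: show the real camera pixel.
                row.append(cam_px)
        out.append(row)
    return out


# One-row example: the first pixel is covered by the CG hand, the second is not.
camera_frame = [[(100, 100, 100), (100, 100, 100)]]
cg_frame = [[(200, 0, 50), (0, 0, 0)]]
cg_mask = [[True, False]]
blended = superimpose(camera_frame, cg_frame, cg_mask)
# blended == [[(150, 50, 75), (100, 100, 100)]]
```

A semi-transparent overlay of this kind keeps the real scene visible under the CG hands, which is what lets the cooperator line up his or her own hands with the expert's.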

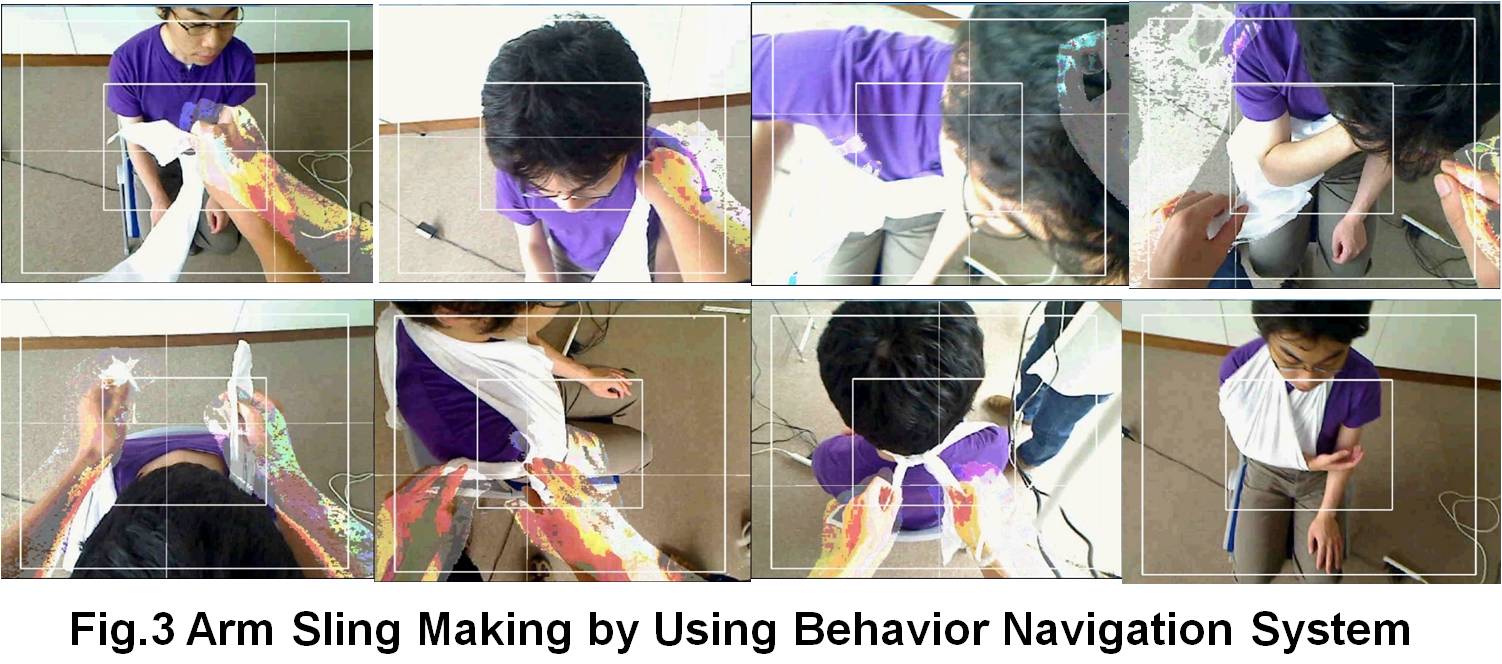

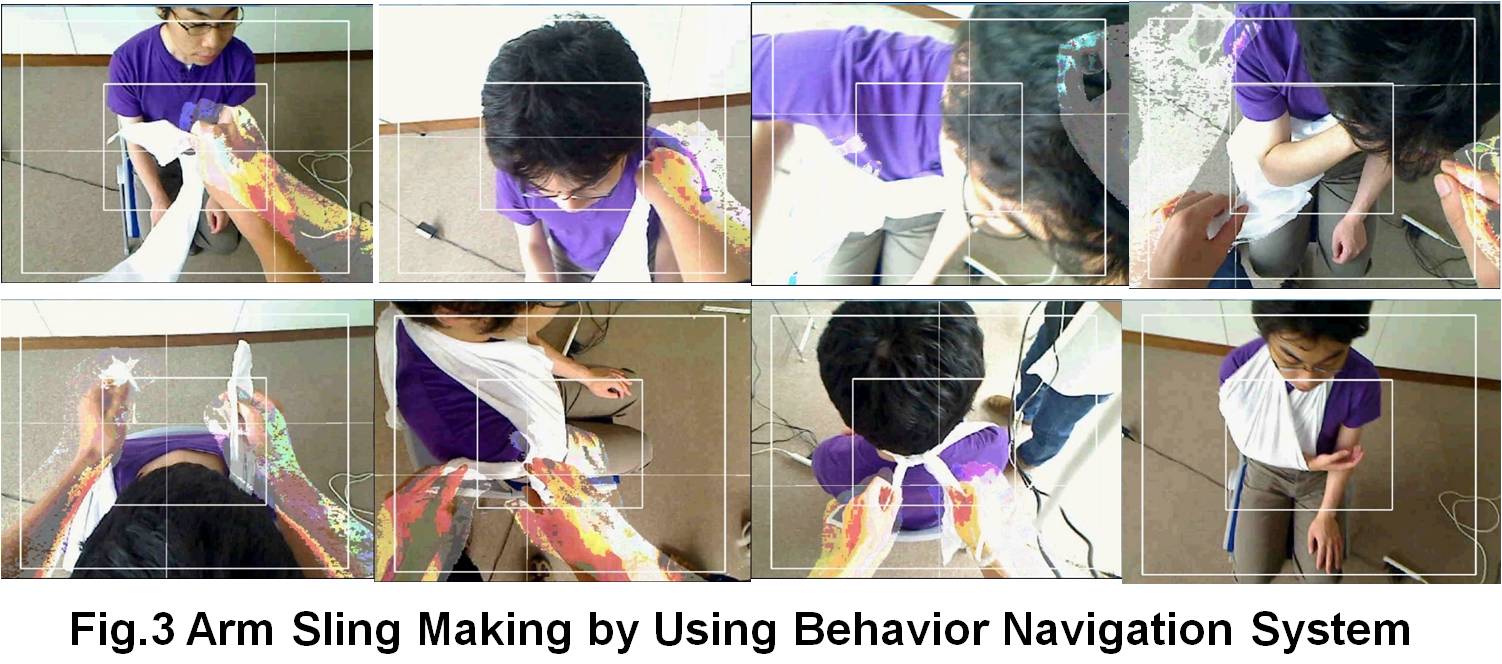

To evaluate the effectiveness of the simple Parasitic Humanoid (PH) systems, we conducted basic experiments in which we asked 8 subjects wearing the BNS to make an arm sling, using a triangular bandage, for a person with an injured forearm. Fig. 3 shows the real image from the cooperator's camera superimposed with the CG image of the expert's hands during one experimental trial.

With the BNS, all the subjects successfully completed the treatment.

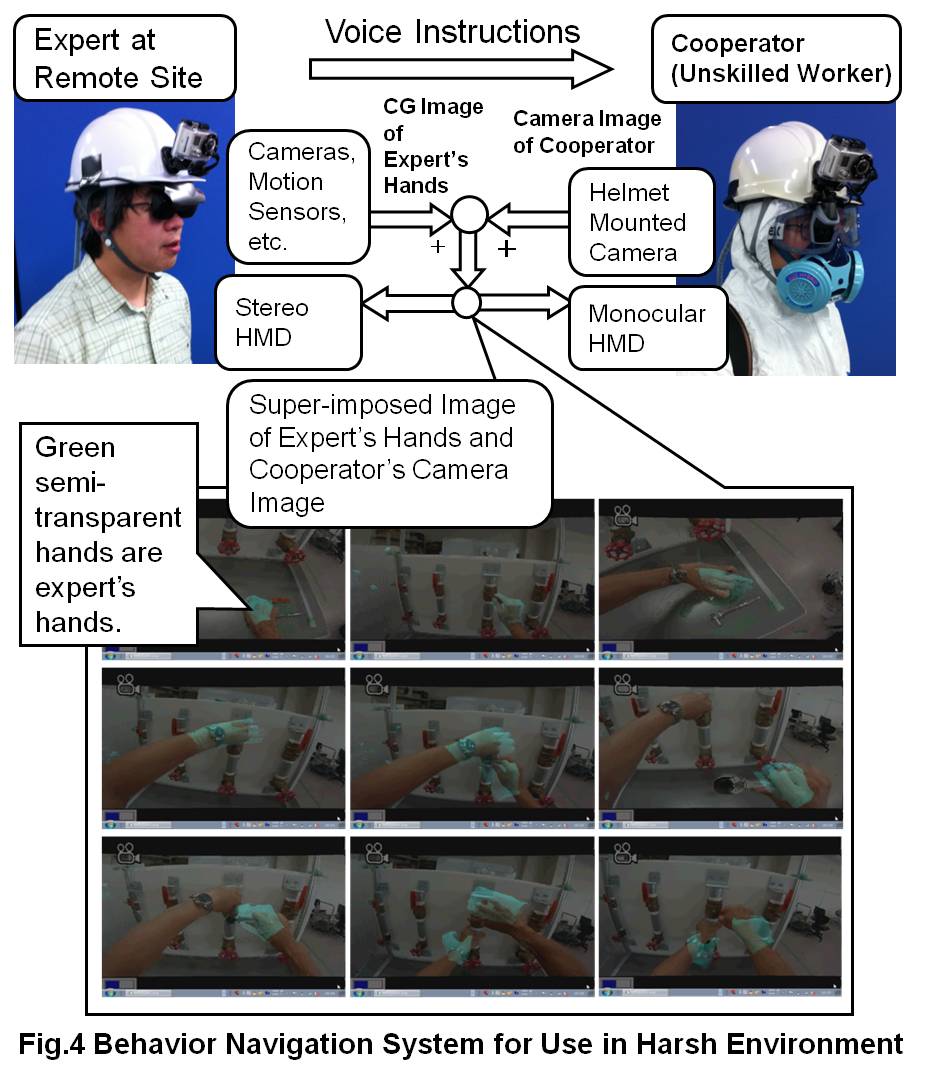

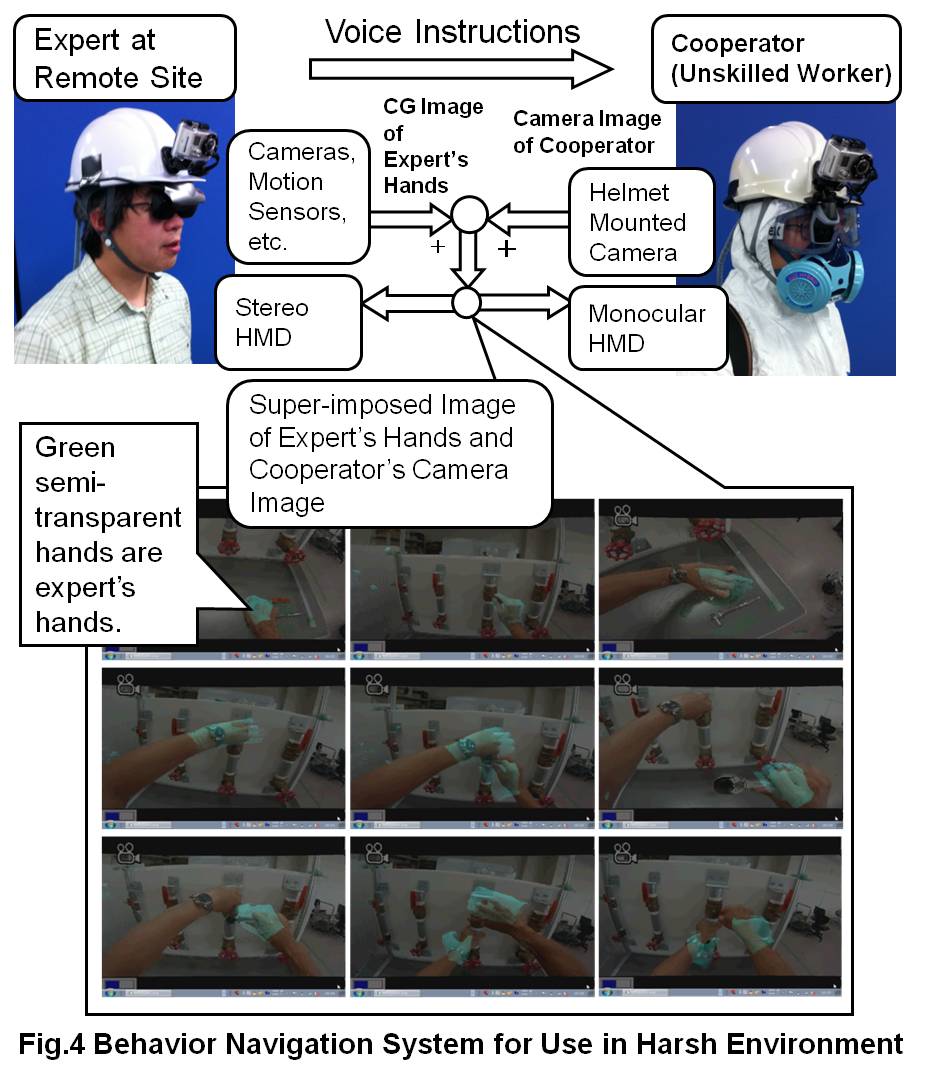

In addition to first aid treatment, we believe that behavior navigation systems can be effective for various tasks in relatively harsh environments, such as factories, construction sites, and areas stricken by large earthquakes or tsunamis. We believe that the BNS improves the performance of unskilled workers by drawing on the skills of experts at a remote site. We are developing a BNS specifically designed to operate in harsh environments and conditions, such as strong vibrations, extreme temperatures, and wet or dusty conditions, as shown in Fig. 4.

The following YouTube video illustrates the Behavior Navigation System for harsh environments.

https://www.youtube.com/embed/qwSDKD2mT3A

This research won a Netexplo 2012 award.

The research and development of the BNS was supported by the JST CREST project "Multi-sensory Communication, Sensing the Environment, and Behavioral Navigation with Networking of Parasitic Humanoids" of the Japan Science and Technology Agency (JST). The leader of the project is Prof. Taro Maeda (Osaka University). The above research was conducted in collaboration among AIST, Osaka University, Tamagawa University and Ibaraki University.

Papers

E. Oyama, N. Shiroma, N. Watanabe, A. Agah, T. Omori, N. Suzuki, "Behavior Navigation System for Harsh Environments", Advanced Robotics, Vol. 30, Issue 3, pp. , 2016.

http://dx.doi.org/10.1080/01691864.2015.1113888

When the above article was first published online, two in-text citations on page 1 and on page 2 were written incorrectly by Taylor & Francis. The corrected sentences can be found at the following URL.

http://www.tandfonline.com/doi/full/10.1080/01691864.2016.1197564

E. Oyama and N. Shiroma, "Behavior Navigation System for Use in Harsh Environment", in Proceedings of the 2011 IEEE International Symposium on Safety, Security and Rescue Robotics, Kyoto, Japan, 2011, pp. 272-277.

E. Oyama, N. Watanabe, H. Mikado, H. Araoka, J. Uchida, T. Omori, K. Shinoda, I. Noda, N. Shiroma, A. Agah, T. Yonemura, H. Ando, D. Kondo and T. Maeda, "A Study on Wearable Behavior Navigation System (II) - A Comparative Study on Remote Behavior Navigation Systems for First-Aid Treatment -", in 19th IEEE International Symposium on Robot and Human Interactive Communication, Viareggio, Italy, 2010, pp. 808-814.

E. Oyama, N. Watanabe, H. Mikado, H. Araoka, J. Uchida, T. Omori, K. Shinoda, I. Noda, N. Shiroma, A. Agah, K. Hamada, T. Yonemura, H. Ando, D. Kondo and T. Maeda, "A Study on Wearable Behavior Navigation System - Development of Simple Parasitic Humanoid System -", in 2010 IEEE International Conference on Robotics and Automation (ICRA 2010), Anchorage, USA, 2010, pp. 5315-5321.

to Eimei Oyama's Website